Diffusion Language Models as Super Data Learners - Super Data Learning Capability and a Critical Assessment of Contemporaneous Research

Is DLM the future of LLMs?

Diffusion Language Models are Super Data Learners

EN

Executive Summary: DLMs as "Super Learners" in an Era of Data Scarcity

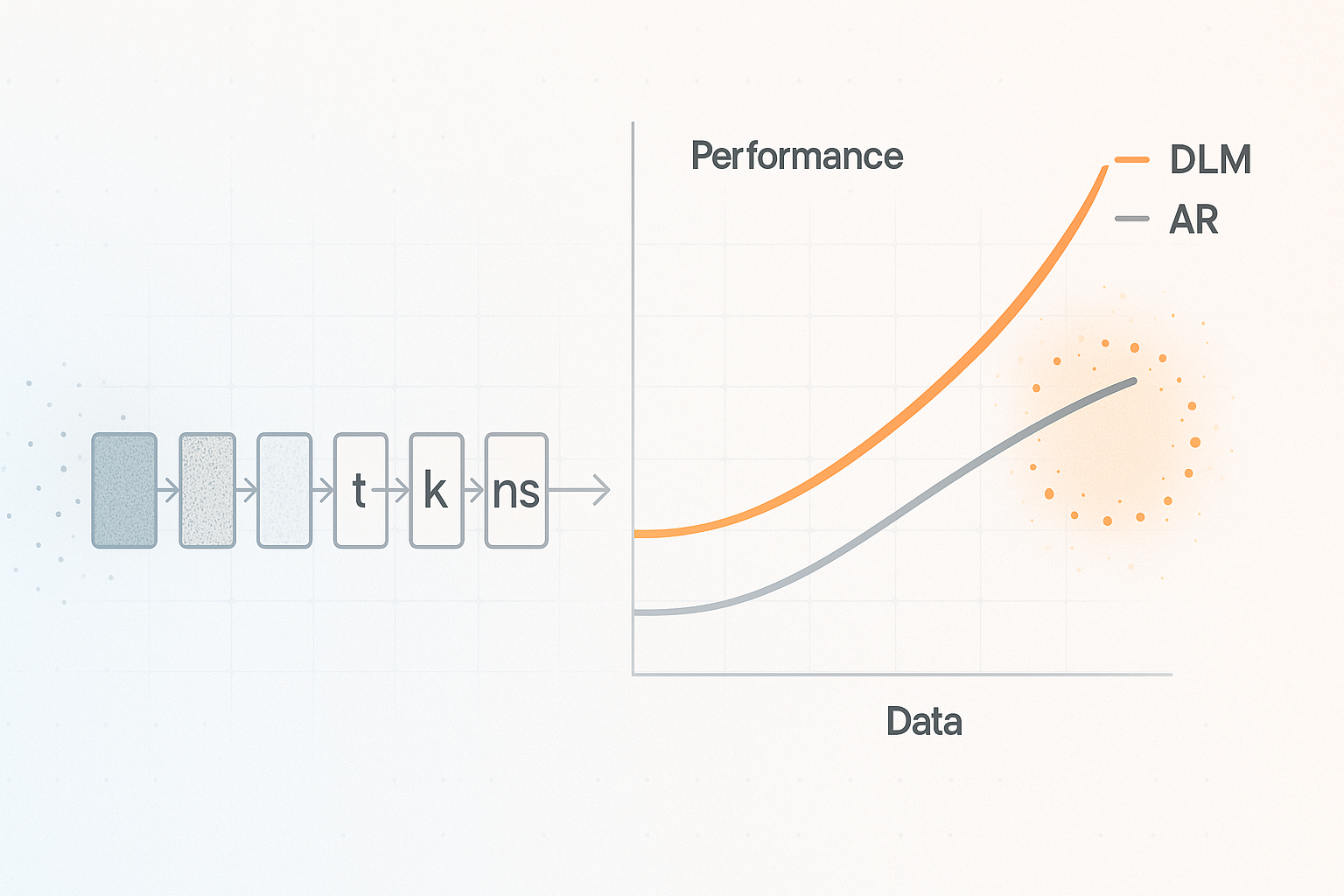

The core thesis of the Jinjie Ni team's paper (published on August 9, 2025) is that, in an era of increasingly scarce high-quality data and relatively abundant compute, Diffusion Language Models (DLMs) show remarkable "super data learning" capabilities compared with traditional autoregressive (AR) models. This advantage is attributed primarily to DLMs' bidirectional modeling and the high computational density of their training process, which together let them extract information more efficiently from limited data.

The paper also serves as a critical assessment: it identifies several deficiencies in a contemporaneous paper [1], specifically in its loss function definition, choice of evaluation metrics, and experimental setup. The Jinjie Ni team contends that some of that paper's conclusions about DLMs are seriously misleading.

In short, the paper argues that DLMs are a more promising direction for future AI models, particularly now that data is no longer the seemingly inexhaustible resource it once was.

CN

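Overview of Core Claims: DLMs Are the "Super Learners" of the Data-Scarce Era

The core claim of the Jinjie Ni team (published on August 9, 2025) is that, at a time when high-quality data is growing scarce while compute is in relative surplus, DLMs show striking "super data learning" ability compared with traditional AR models. This is mainly due to DLMs' distinctive bidirectional modeling and the high computational density that accompanies their training, which let them mine information from limited data more efficiently.

The study also plays the role of "critic": it rigorously points out multiple flaws in another contemporaneous paper [1] in its loss function definition, choice of evaluation metrics, and experimental setup, and argues that some of its conclusions about DLMs are seriously misleading.

In short, the article holds that DLMs represent a more promising direction for the future development of AI models, especially now that data is no longer "inexhaustible" as it was in the past.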